Minicomputer System manufactured by Data General Corporation

One of the most revolutionary computer products made in the 1980s was the Inmos transputer. It was oddly named, in my opinion, but it was the first microprocessor chip specifically designed to be used as a node in a multiprocessor computer system. The company originated in the United Kingdom, and was purchased by SGS-Thomson in 1989.

Some innovations come and go, but this one was truly a permanent paradigm shift. The technologies and philosophies introduced by Inmos pioneered parallel processing, and are still in use today. The fastest and most powerful supercomputers still use the same approaches developed by Inmos. Even the dual-core and multi-core processors in your laptop computer owe a nod to the transputer.

Setting the Stage

The early 1980s was a time of relatively uninspiring incremental improvements in computer technologies. Minicomputers still ran the world's business and microcomputers were still novelties. Apple had great success with their Apple II in the 1970s, but in 1981, the introduction of the IBM PC dramatically eroded Apple sales. The IBM was a competent microcomputer, but most businesses didn't really know what to do with it. They still depended on minicomputers for most everything.

Apple introduced the Lisa computer in 1983 and then immediately afterward in 1984, they brought out their first Macintosh. Both were based on the Motorola 68000 microprocessor. The 68000 was a great chip, but the operating system and the graphics requirements made both computers pretty slow. These were the first commercially available computers that had a graphical user-interface, so that was revolutionary from a user-interface perspective. But the full user experience wasn't terribly exciting, because the Lisa and Macintosh computers were slow and there was little application support. You couldn't really do much with them.

As an aside, IBM had always made proprietary products and was very protective of their designs and trade secrets. Apple was always open, publishing all their schematics and source code. IBM depended on years of customer-lock through proprietary systems. Apple depended on growth from customer involvement, a sort of "stone soup" approach. It worked well for them in the 1970s, because of a wide variety of user-created software and add-on hardware. Some of Apple's customers became developers, starting a network of cottage industries, all depending on and encouraging Apple's success.

IBM PC |

Apple Macintosh |

So it was somewhat surprising to see a complete role-reversal in these two companies between 1981 and 1983. When Apple introduced the Lisa and Macintosh computers, it stopped publishing technical information and made it proprietary. But IBM published a technical manual for the PC that had a full schematic of the computer and its hardware buss and it included a listing of the assembly language source for the BIOS. As you might expect, this helped IBM to increase its market share in the microcomputer world in the 1980s. It was both open, and it was the big blue giant that all corporate shops trusted.

The operating system for the IBM computer was contracted out to Microsoft, and they didn't get around to creating a popular graphical user-interface until almost 1990. Microsoft did create Windows 1.0 and 2.0 in the mid-1980s, but neither of those ever really caught on. Windows 3.0 was the first to resemble what most people would now recognize as "Windows" and 3.1 was the first to gain widespread acceptance. Windows helped to increase the popularity of the IBM PC and its clones, particularly in offices and business environments. By the late 1980s, PC systems began to replace terminals, but were still connected to minicomputers and mainframe systems for most business computing needs. The software on the PC was seen as a useful novelty, although Word Processing on a PC had started becoming commonplace.

This is the environment that existed when Inmos introduced the transputer.

Running Out the Clock

All computers have a central processing unit, and its speed is largely determined by a central clock, built into the processor circuit. All memory access and instruction execution happens during one or more clock cycles. Some processors execute one instruction per clock cycle, others need more than one cycle per instruction. Most can do simple instructions in one cycle and more complicated instructions need several cycles. But the point is that the speed of the processor core is fundamentally set by clock speed.

So the main thing that manufacturers did to increase computer speed - prior to around 1985 - was to make circuits that could operate at faster clock rates. The problem is, of course, that there comes a point where you can't run the clock any faster. Physical limits prevent it. Increasing speed takes more power and raises temperature because of a property called reactance. At some point you reach an impossible barrier because of hard limits like the speed of light. Signal velocity is somewhat close to the speed of light, but cannot exceed it. So this sets an upper limit for a set distance, the distances found within a circuit.

Naturally, another direction needed to be investigated to get further speed improvements. Different architectures were investigated by various universities and manufacturers. The traditional computer used a von Neumann architecture, having program and data stored in the same memory, and accessed on the same buss. Each instruction is read from memory, decoded and executed. During the decode state, any operands needed are fetched from memory. It's a pretty simple architecture, but to increase speed, more complex approaches were sought.

One of the first alternatives was Harvard architecture. It's actually about as old as von Neumann in principle, but since it's more expensive to build, most early computers were von Neumann designs. Harvard architecture separated instructions and data in memory, having physically separate address and data busses for instructions and for data. This allows instructions and operands to be fetched simultaneously. Some "Super Harvard" processors provided multiple busses for a number of simultaneous data paths. This was an early parallel processing mechanism.

Another focus was on instruction set size and word length. They are two different things, but both have been explored by manufacturers and continue to be optimized. Instruction sets can be grouped into those that can be performed in a single cycle or those that require multiple cycles to execute. And processor word length is steadily increasing in the quest for performance too.

RISC Processors - reduced instruction set computers - use a small and simple instruction set that allows every instruction to be executed in the same amount of time. This allows a pipelined approach of instruction fetching and decoding, since the processor can be expected to perform every operation in the same amount of time. Pipelining can dramatically increase execution speed.

CISC Processors - complex instruction set computers - support a large number of instructions which fetch one or more operands and do one or more operations on those operands. They can do more operations with each instruction, so executable code is denser and programs reside in less memory. But the execution of a multi-cycle instruction makes all other instructions behind it have to wait.

Word length - 8-bits, 16-bits, onward and upward - Increasing word length provides a performance increase because larger operands can be fetched, decoded and processed in a single machine cycle. Increasing word length increases the capability to process larger units of data. A small word length sometimes requires multiple arithmetic operations to deal with overflows, whereas a longer word allows larger numbers to be computed without overflow and carry.

VLIW - very large instruction word - processors have multiple (ALU) arithmetic/logic units in parallel. These architectures have been designed to exploit "instruction level parallelism" where each ALU can be loaded in parallel.

SIMD - single instruction multiple data - architectures have multiple ALUs, but the design approach is optimized for situations where the same instruction can be executed on all the ALUs simultaneously. The data inputs to the ALU can be different. So an SIMD processor is specifically designed for tasks where operations must be performed on multiple data blocks, and exploits "data level parallelism."

Super-scalar architectures are similar to VLIW architectures in that they have multiple ALUs. But super-scalar processors employ dynamic scheduling of instructions. These architectures have special hardware to determine the interdependency of instructions and data, and to schedule execution on different ALUs.

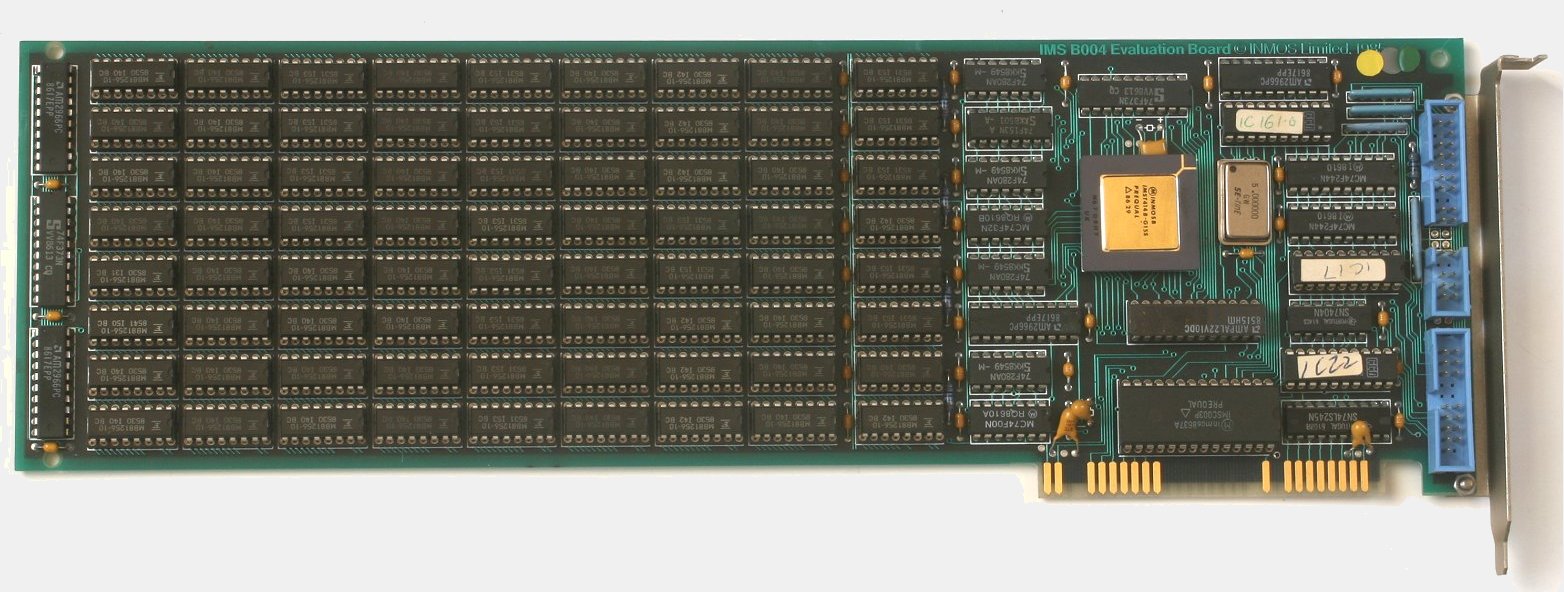

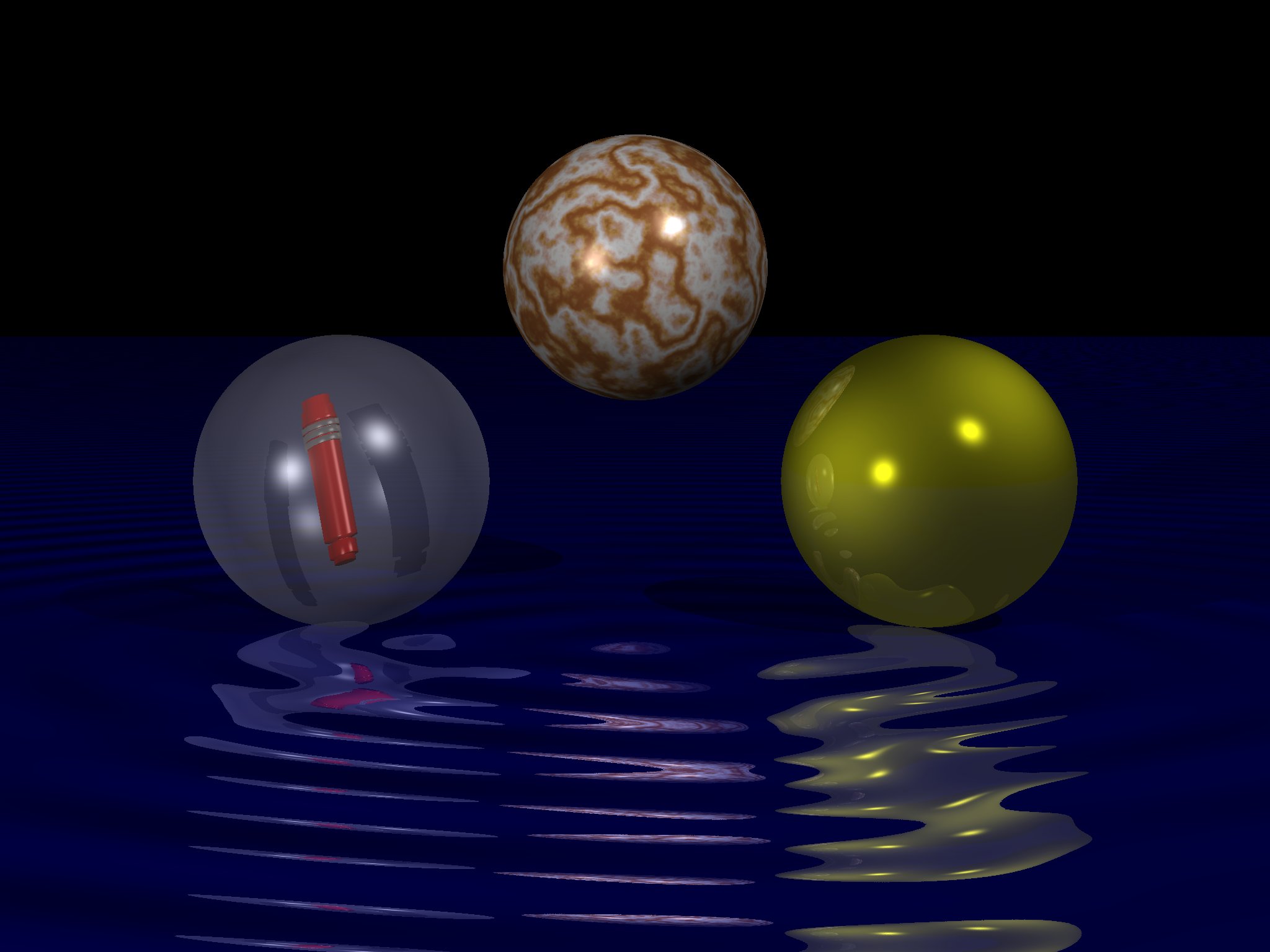

The "Eureka Moment" - Multiple Processors You will notice that several architectural improvements listed above exploit parallelism in some form. If you want to do a lot of stuff real fast, you can either have one fast thing or several slower things operating at the same time. Eureka! Run several processors! That's exactly what Inmos set about doing. It designed a series of microprocessors specifically designed to be used in a multiprocessing system. Each processor had four built-in high-speed interfaces to connect with neighbor processors. And each processor was fast, so many of them connected together was amazingly fast. Read these articles to see how the technology progressed: Today's modern multi-core architectures all borrow from this approach, by the way. They are essentially the same thing - multiple CPUs - on a single chip. It allows separate parts of a program to run in separate cores, exploiting "thread level parallelism." The real trick for exploiting multi-processors is proper threading in the operating system and/or application software. Early parallel processors employed relatively primitive load-balancing techniques. They worked best for applications that naturally adapted to parallelism. But that's not always the case, and so modern software has "smart threading" as a primary design goal. And today, everything depends on this. Modern cloud approaches - things like containers and container orchestration systems - have scaling and management features that are there mostly to automate and/or optimize parallelism. Hardware Development I became more and more interested in the Inmos approach in the late 1980s. But one thing I learned in the early 1980s, was to never design a circuit board or a system "just for fun." Or at least, don't make a big investment in an unsolicited project. Make sure to have a buyer before making an investment in printed circuit boards, chassis, and other things. I had learned that lesson early on. Sell the project before building it. But my customers wanted things like analog-to-digital converters, serial-to-parallel converters, and other kinds of protocol converters. That's what I did. I really didn't have a market for parallel processors. So I set about looking for a buyer of such a system. And I found one, sort of. They didn't need a parallel processor, but rather a pretty simple embedded controller. What I decided to do, was to create a PC add-on board that had digital and analog I/O and a T800 transputer, memory and interface. I could sell the board on the I/O features, and have printed circuit boards made that supported the transputer. I designed it as the 8401 Transputer board. My customer wanted a "cooling tower controller." I laughed to myself that I was being paid to design and build a device that needed probably as little technology as I had ever been contracted to design, and it was going to use components that were the highest technology I had ever used. But as long as the board would serve both purposes - to satisfy the customer's needs and to support a transputer node - it was perfect and exactly what I needed. I used a bare-bones industrial PC with my 8401 board for the cooling tower controller. Coded it in C, burned a ROM, done and done. The system sensed temperature and user-control inputs and drove a motor with a leadscrew to move the louvers. The customer board didn't need the transputer section populated, just the I/O section. But for my purposes, I would have a plentiful supply of transputer boards, with development funded by the cooling tower project. I was walking a path that had been previously treaded by giants. Inmos had done all the "heavy lifting," and all I needed to do was to implement what they provided. My system would be elegant and capable, configurable enough to do most anything. But my main objective, really, was to have a supply of printed circuit boards that could be used to support large transputer networks. It was an affordable way to make a massively parallel processor. It was made affordable by piggy-backing the board development costs onto another project. The transputer portion of my 8401 board was essentially an Inmos B004-compatible board, similar to those made by other manufacturers like Computer Systems Architects, Transtech, Parsytec and Microway, among others. The 8401 used eight 51256 memory chips for a total of 1Mb per processor. The processor could be a T400 or T800, but I used T800s. It could have been a half-length card, but the I/O section added a little bit of length so it was designed to be a full size ISA board. I built two prototypes on perf boards with soldered point-to-point wiring, using 22 gauge stranded wire with red insulation. That's just my personal style. The component side layout was very similar to what the PCB was to be but the solder side was a mess of wires. It's a very robust method though, with the prototype board being reliable and durable. Make a prototype or two, then a limited PCB run of maybe 100 units, find any problems in the PCB artwork and correct them on that first run, and then you've got fully-tested artwork and you're ready to make thousands of 'em. I eventually delivered both prototypes to the customer, as I was contractually obligated to do. But I was able to use one as a fully functional proof-of-concept of the cooling tower controller, and to do development and updates on it. The other was used mostly for in-house development of the transputer, PC link and software. The boards were identical, so I could have interchanged them at any time. But the one in the cooling tower controller was installed in a chassis that had connectors on the rear panel for the motor and for sensor inputs. It also had control switches and knobs specific to the cooling tower controller on the front. The transputer development system had none of this, and was basically just a generic PC clone. Software Development While working with the cooling tower manufacturer on their project, I simultaneously promoted transputers to everyone I could find that would listen. Most of my existing customers were manufacturers of industrial or oilwell completions equipment. To be honest, most used their computers for accounting and other typical business uses. Many had need for embedded systems - which was one of my specialties - but neither accounting systems nor embedded systems need to be particularly fast. They didn't need the speed of a multiprocessor. So I was trying to sell to the wrong market. I did have one lucky hit. My first demos used a Mandelbrot set generator, which could be used to illustrate the speed of the transputer compared to other systems. I had also found the POV-Ray open source ray-tracer, and did a port of it that would compile and run on the 8401 board, or any other B004-compatible system, for that matter. Using the Logical Systems C compiler, I was able to build POV-Ray with very few modifications. It just needed some machine-specific code, and it worked right out of the box. I ended up submitting the transputer code to POV-Ray team, and it was included on their bulletin board system and on the distribution CD. This code, by the way, is also included in the transputer archive, listed in the Resources section below. That image was a sample model included with POV-Ray, as I recall. There were three spheres hovering over water. I may have changed the textures of one or two of the spheres, but really, it's a super-simple model that has three spheres positioned "floating" over one plane. The plane has a water texture and the spheres have glass, marble and gold textures. I added one little part - the red thing embedded within the translucent sphere. I used a primitive shareware CSG modeler to create that object and I included it with the model to be rendered. It was my sneaky plan, because I was demonstrating the 8401 system to a customer that made equipment for oil wells that looked a lot like that little red thing. And it worked! They were impressed with my transputer system! But as it turned out, they weren't as impressed with the hardware as they were with the images it could generate. So I had made a sale, but it wasn't for hardware. I sold a contract to create 3D models of their equipment and to use those models to generate animations for demonstrations of their equipment and training videos. What I ended up doing was to cobble together a bunch of software - some that I wrote but most being existing utilities and applications - that could create 3D models and allow me to animate them for rendering. It was a fairly complicated system, because each subsystem was unconnected. The software was all unrelated, with no unifying environment to work under. I was given 2D blueprints as AutoCAD files and I rotated them to create 3D models. I then added details, like the elements (shown in black, above) and the slips (shown in red). I then had to export these models as a series of groups of triangles that formed a surface mesh, and define a name for each group. The group name could be used to provide an offset to its local coordinates in all three planes. This offset indicated movement, and to provide movement, I had to interpolate start-position to end-position over a series of frames. Some parts (groups) moved independently, and some parts moved in unison. All of that had to be choreographed manually, which I did by putting each parts' position into a database, indexed by frame. Every moving part had a position that was recorded by a database entry for each frame. To create an animation, I exported the values for that frame into a text file that became an included file for the model. That then was sent to a transputer node for rendering. The finished output file was in Targa format, and in high-resolution, this provided film-quality animations. At lower resolutions - typically 640 x 480 - it was perfect for computer animations. That was high resolution for PC graphics of the day. One thing that made this project perfect for transputer-based parallel processors in the early 1990s is that ray-tracing is "inherently parallelizable." What I mean by that is it's really easy to break the processing into separate jobs, and to feed parts of it to processing nodes. I simply programmed automation to feed frames to processing nodes as I fetched them - As many nodes as was available. If you have eight nodes, then send eight frames at a time. When one gets done, feed it the next available. You could even break each frame into portions, and send parts of the image to nodes in the array. For example, if you have four nodes, you could send one quarter of each frame to a node: Top-left to node one, top-right to node two, bottom-left to node three and bottom-right to node four. Ray-tracing calculations can be done on a section of a view, and don't require the whole view to be calculated. There is no dependency in the math for one side of the picture to affect the other side of the picture. All the information required to calculate each pixel is in the model, which includes the simulated camera position, the light sources and the objects being rendered. You could literally calculate each pixel by individual processors if you had that many nodes. So I was able to provide this 3D animation service because of my use of Inmos transputers. I didn't sell a bunch of 8401 boards, but I was able to use them to create artwork, animations and multimedia software. I had found a niche in the early 1990s because almost nobody had the processing power to render 3D animations at that time, except maybe Pixar. And that's because they used transputers! Resources

Inmos B004 Transputer Board

POV-Ray ray-tracer image rendered on 8401 transputer system

Mechanically Set Packer

Hydraulically Set Packer

Websites

Parham Data Products